Interview by Agata Kik

Valery Vermeulen is a Belgian artist, mathematician, musician, music producer, professor, and author. He lectures at KASK-Conservatory-School of Arts in Ghent and is a researcher at the Department of Mathematics & Computer Science at the University of Antwerp. Passionate about sound, music, and science from early on, he studied mathematics out of a fascination with quantum mechanics and theoretical physics, obtaining a PhD in pure mathematics in the field of algebraic group theory that let him get closer to the underpinnings of superstring theory.

Denying distinction between the disciplines, Valery Vermeulen treats both science and art as fields for human expression and creativity that equally expand our perception of reality, even though in disparate ways. At the Institute for Psychoacoustics and Electronic Music (IPEM) in Belgium, Vermeulen managed to merge his analytical and affective approach and did research in music, artificial intelligence, and biofeedback.

The resulting EMO-Synth project was intended to combine the fields of algorithmic music composition and affective computing using AI. In the form of a live AV show, a system would arouse specific emotions in the participating audience by automatically generating personalised music. The work presented a sophisticated interaction between man and machine that touched the hearts of all those involved.

Musicians who performed live would be directed by a kind of conducting composer computer and artificially generated scores programmed to adapt themselves to emotional reactions. Borrowing techniques from psychophysiology to allow the machine to respond to human feelings, the project was an achievement in sharing the processed and interpreted biofeedback data from AI directly with the public.

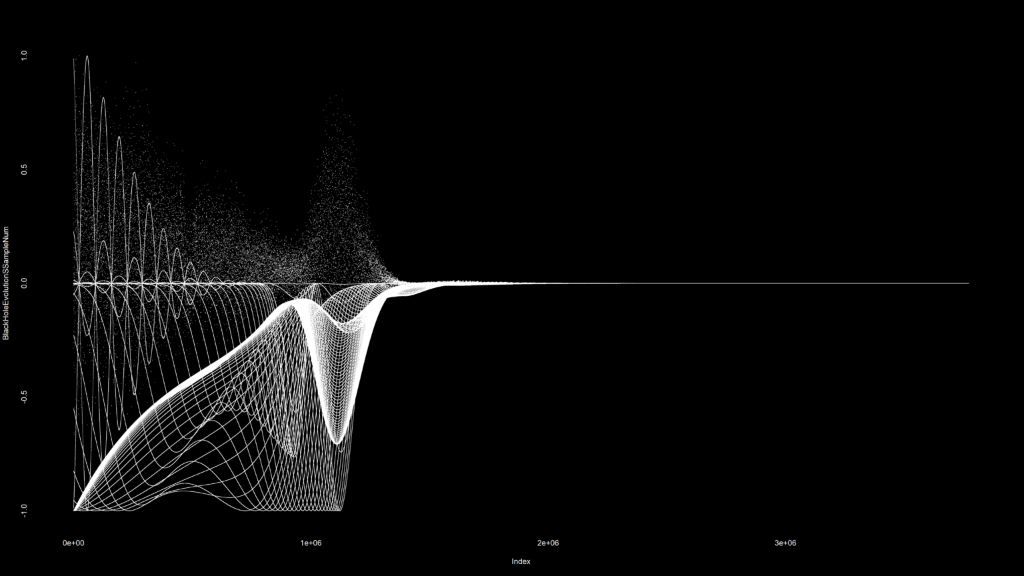

For his most recent project, titled Mikromedas, Vermeulen transports us far away from human life on Earth. Using mathematical simulation models from astrophysics and theoretical physics, the artist produces music based on data from black holes in space. The project originated in 2014 as a commission by the Dutch Electronic Arts Festival. Seven years later, it resulted in the music album Mikromedas AdS/CFT 001, released on the London-based record label Ash International.

Stemming from data sonification practice, the way the album has been made corresponds to how scientific research also takes place. Translating information into audible figures is also how some discoveries are made. Apart from data sonification, the artist incorporates algorithmic music composition, generative sound synthesis, and scientific simulations programmed in languages and environments such as Python, Octave, R, and RStudio. The result is a set of meticulously calculated harmonics that one can immerse oneself in, having the most cosmic experience of all while listening to this recently released music work.

With his awe-inspiring oeuvre, Vermeulen is a polymath who paves the future of the arts and science at once. At these interdisciplinary intersections, where unknown links are established, the ground turns so much more fertile for work to come about. With technological developments and human understanding increasing, the future can only be even more exciting than this.

You manage to combine mathematics, astrophysics, and art in your practice. Which of these disciplines was your place of departure? Are you a scientist working with art or an artist working with science?

These fields were present from the start of my work. I had a passion for sound, music, and science from early on. As a teenager, I stumbled across the ideas and field of quantum mechanics by chance in library books. This was also the primary motivation to study mathematics and, afterwards, to pursue a PhD.

My musical explorations began even earlier. My parents had a piano in our living room. I spent hours every week improvising on it, discovering the wonderful world of tonal music. I became very interested in jazz and classical music.

As to the art and science dichotomy, I don’t consider myself an artist working with science or a scientist working with art. I don’t believe in a clear-cut distinction between art and science. The essential element for me personally is creativity and expression. I would consider myself deeply curious and drawn to music, maths, physics, and the creativity these fields hold. In the various projects I’m working on, I look for new ways to make connections between these fields. For me, both art and science are all about creativity and expanding your view on reality.

You have just released a new album derived from the Mikromedas project. Could you share more details about the project and the process behind translating deep space data into sound? What challenges have you encountered while working on it?

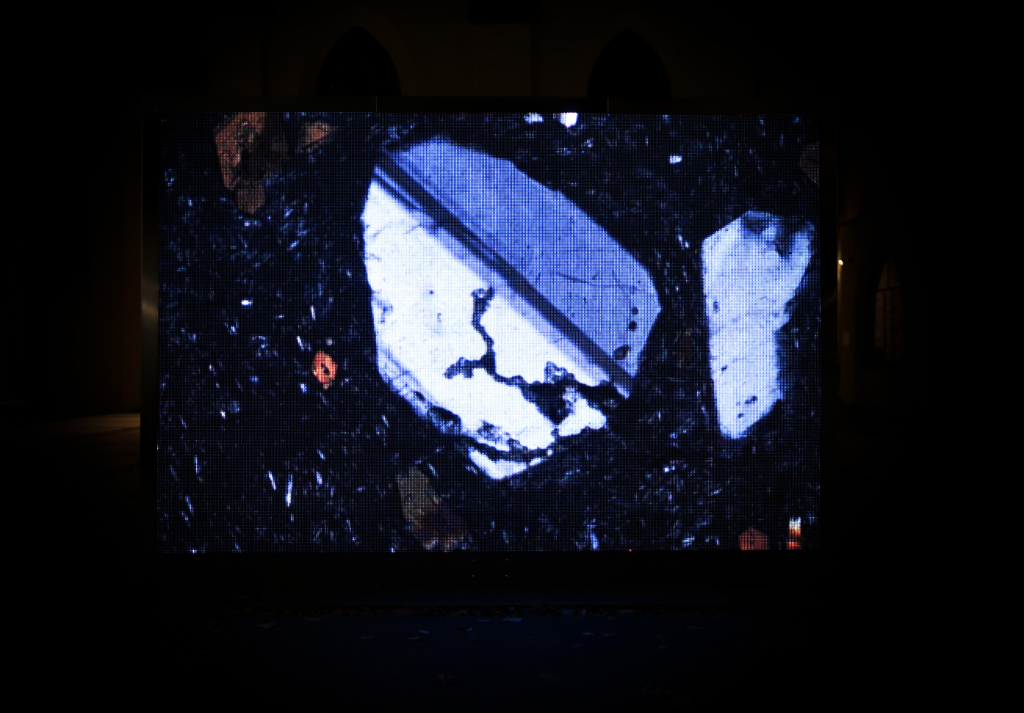

Mikromedas is a data-driven art-science project in which compositions are created using data from space, deep space, and mathematical simulation models from astrophysics and theoretical physics. The project initially started as a commissioned work in 2014 for the DEAF (Dutch Electronic Arts Festival) held in Rotterdam in the Netherlands. Within the Mikromedas umbrella, there are several series focusing on different topics.

The latest album Mikromedas AdS/CFT 001, based on data from simulation models of black holes, resides within the AdS/CFT performance series.

To use and translate data into sound and music, I rely heavily upon a scientific domain called data sonification. Briefly stated, data sonification is a field where scientists look for new ways to represent and analyse data using sound. Conveying information in the audio domain, in most cases, unveils unique insights, and in some cases even scientific discoveries can be made.
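As an illustration of the idea only, here is a minimal Python sketch of one common sonification approach, parameter mapping, in which each data value is rescaled to a pitch and rendered as a short sine tone. The function names, the 220 to 880 Hz range, and the sample data are assumptions made for this example; they are not taken from the Mikromedas project.

```python
import math


def map_to_frequencies(values, f_low=220.0, f_high=880.0):
    """Linearly rescale data values onto a frequency range in hertz.

    The smallest value maps to f_low and the largest to f_high.
    The range itself is an arbitrary, illustrative choice.
    """
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # All values identical: every point gets the lowest pitch.
        return [f_low for _ in values]
    return [f_low + (v - lo) / span * (f_high - f_low) for v in values]


def render_tones(frequencies, note_seconds=0.2, sample_rate=8000):
    """Render one sine tone per frequency as a flat list of samples in [-1, 1]."""
    samples_per_note = round(note_seconds * sample_rate)
    samples = []
    for freq in frequencies:
        for i in range(samples_per_note):
            samples.append(math.sin(2 * math.pi * freq * i / sample_rate))
    return samples


# Hypothetical data series; any list of numbers would do.
data = [0.0, 2.5, 5.0, 10.0]
freqs = map_to_frequencies(data)
audio = render_tones(freqs)
```

Rising values in the data become rising pitches, so a trend that would be read off a chart can instead be heard as a melody contour.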

Not long after data sonification was introduced, it was also picked up by artists who started to integrate it into their creative practice. Besides sonification, I use other disciplines, such as algorithmic music composition, generative sound synthesis, and scientific simulations programmed in languages and environments such as Python, Octave, R, or RStudio. And this is also what makes it inspiring for me personally to work on the project.

There is a lot of experimentation involved in finding connections between fields that are, at first sight, miles apart. One of the significant challenges working on Mikromedas has been maintaining a balance between the scientific and artistic sides of the project.

The more I gravitated towards the field of theoretical physics, the more this became clear. For example, with Mikromedas AdS/CFT 001, I worked quite a lot with gravitational wave data. This is simulation data of the waves produced by mergers of two black holes or other massive objects such as neutron stars. For this scientific part of the album, I collaborated with physicist Thomas Hertog, a long-time collaborator of Stephen Hawking.

Using the insights and input from Thomas, I found new ways to translate gravitational wave data into sound waves. First, of course, I had to get into the very technical, mathematical, and computational nuts and bolts. But once you implement the sonification algorithm, it enables you to tweak it to get different sounds out of the gravitational waves.
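To give a rough sense of what "tweaking" such an algorithm can mean, the following Python sketch treats a waveform as audio directly and exposes a pitch factor as the adjustable parameter. It is not the algorithm used on the album: the chirp here is a toy sine sweep standing in for simulated gravitational wave data, and all names and numbers are assumptions for illustration.

```python
import math


def toy_chirp(duration=1.0, sample_rate=4096, f_start=30.0, f_end=300.0):
    """Toy stand-in for a merger signal: frequency and amplitude both rise.

    This is NOT a physical simulation, only a sweep with a similar shape.
    """
    n = round(duration * sample_rate)
    samples = []
    phase = 0.0
    for i in range(n):
        progress = i / n
        # Frequency accelerates towards the end of the signal.
        freq = f_start + (f_end - f_start) * progress ** 3
        phase += 2 * math.pi * freq / sample_rate
        amplitude = 0.1 + 0.9 * progress
        samples.append(amplitude * math.sin(phase))
    return samples


def normalise(samples):
    """Scale samples so that the loudest one has magnitude 1."""
    peak = max(abs(s) for s in samples)
    return [s / peak for s in samples] if peak > 0 else list(samples)


def playback_rate(sample_rate, pitch_factor):
    """Playing the same samples at a higher rate multiplies every
    frequency by pitch_factor and shortens the sound by the same factor."""
    return round(sample_rate * pitch_factor)


wave_samples = normalise(toy_chirp())
# The tweakable parameter: 4.0 moves a 30-300 Hz sweep up to 120-1200 Hz.
rate = playback_rate(4096, pitch_factor=4.0)
```

Changing `pitch_factor`, or the sweep parameters, yields audibly different results from the same underlying signal, which is the kind of exploration described above.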

In many cases, these sounds will not be very pleasing to the ear. This makes it exciting and challenging. The main question is how these sounds can be used for musical purposes. This is the part where I change my hat to that of an electronic musician and music producer. Throughout the whole Mikromedas project, finding the right balance between the more scientific and the more artistic processes remains a challenge. But this is also what makes it very fascinating, and it has already opened up whole new artistic approaches for which I’m very grateful.

What is the most interesting and most important aspect of translating scientific data into musical experiences for you?

The most important and exciting aspect of translating scientific data into music compositions is that it forces you to develop new ways to approach sound, music, and the scientific discipline from which the data originates. In the case of Mikromedas, producing the album AdS/CFT 001 gave me new insights and approaches to the physics of black holes.

And at the same time, it showed and opened up new sound design, sound synthesis techniques, and sound worlds that I would otherwise have never entered or discovered. I firmly believe that using data for artistic purposes can guide you into new fascinating creative worlds and paradigms.

Also, in the case of Mikromedas, using data for musical purposes means transforming the data into the sonic domain and into the visual world. And this makes it even more compelling and inspiring as you start to make new connections between the sonic, visual, and scientific domains.

The Krystal Ball project derived its data from the latest credit crisis in the financial industry. The econometric statistical techniques were translated into sound-generating software to introduce models into a market system. Have you encountered any events?

The Krystal Ball project began around the same time as the Mikromedas project. My first experiments gave me insight into using scientific data with science software such as R or Python for music compositional purposes. Doing this also opened up new ways of using my experience and knowledge as a data scientist and statistician for creative and artistic pursuits.

So this was something I did not expect to get out of the project right after starting it. However, after having worked on a first EP, it became more apparent that this strategy of using data science software and techniques would also be beneficial for the later development of the Mikromedas project.

EMO-Synth was one of your projects that incorporated affective computing and artificial intelligence. What were your intentions behind its creation? Could you briefly describe its aims and achievements?

EMO-Synth is intended to combine the fields of algorithmic music composition and affective computing using AI. The project aims to develop a system that automatically generates music that directs the user into a pre-chosen emotional state. The domain of affective computing turned out to be of central importance for the project. It is a relatively recent scientific domain pioneered by scientists such as Rosalind Picard at MIT and Manfred Clynes, which focuses on establishing ‘emotional’ connections between man and machine.

The idea for the EMO-Synth project dates back to 2006, when I was doing research on music and emotions at the Institute for Psychoacoustics and Electronic Music (IPEM) at Ghent University. About one year later, the project got funding from the Flemish Audiovisual Fund here in Belgium.

This meant I could work with a small team to implement the system and make it accessible to a general audience in the format of a live AV show. In this AV show, we used the EMO-Synth to automatically generate personalised soundtracks for Rupert Julian’s classic 1925 silent movie “The Phantom of the Opera”. For music generation during these shows, the EMO-Synth not only used a computer but also incorporated live musicians who are directed by virtual scores generated by the system.

The live AV shows with EMO-Synth premiered in 2009, and we played them in various locations and venues in and outside of Belgium until 2013. The main achievement of this project was that we not only built a prototype of the system that was initially intended but also discovered ways to present it in an artistic context. This matters because, in using AI for artistic purposes, there is always a risk that the output is not easily accessible, understandable, or directly applicable.

Could you expand on the process of biofeedback? For example, how does a machine identify and respond to human feelings?

The biofeedback process consists of quantitatively measuring human bodily reactions, processing this body data, and using it for various purposes. A typical use could be learning how to control one’s bodily reactions in order to deal better with anxieties, stress, or negative tendencies. However, for a machine to identify human feelings, it is not enough to quantitatively measure bodily reactions.

As measurements merely produce numbers, additional methods are needed to connect these numbers to human emotions. This can be done using techniques coming from a field called psychophysiology. Psychophysiology is also key to letting a machine respond to human feelings. But how this can be done follows no clear-cut rules. Much is up to the designer/programmer to create technology to make this possible. At the heart of every such technology, there are several components.

First is the measurement of bodily reactions as number streams. So this is, you could say, the biofeedback part of the technology. Secondly, there is the processing and interpretation of the biofeedback data. A key element here is to connect the data to the specific emotional states of the technology user.

And a final component is a system that implements the reaction of the technology, driven by the interpretation of the processed data. In an artistic context, such a reaction could be, for example, presenting automatically generated audio or visual content to the user of the system to try to alter the emotional state of that same user.
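The three components described above (measurement, interpretation, reaction) can be sketched as a small Python pipeline. This is a deliberately simplified illustration, not the EMO-Synth implementation: using heart rate as the signal, the resting and maximum rates, and the tempo rule are all assumptions chosen for the example and carry no psychophysiological authority.

```python
from statistics import mean


def estimate_arousal(heart_rates, resting_rate=60.0, max_rate=120.0):
    """Interpretation step: turn a stream of heart-rate readings (bpm)
    into a rough arousal level between 0 and 1.

    The linear mapping and both reference rates are illustrative assumptions.
    """
    avg = mean(heart_rates)
    level = (avg - resting_rate) / (max_rate - resting_rate)
    return min(1.0, max(0.0, level))


def choose_tempo(current_arousal, target_arousal, base_bpm=100, step_bpm=20):
    """Reaction step: pick a musical tempo intended to nudge the listener
    towards the target state (faster to raise arousal, slower to lower it)."""
    if current_arousal < target_arousal:
        return base_bpm + step_bpm
    if current_arousal > target_arousal:
        return base_bpm - step_bpm
    return base_bpm


# Measurement step: hypothetical sensor readings in beats per minute.
readings = [88, 92, 90]
arousal = estimate_arousal(readings)
tempo = choose_tempo(arousal, target_arousal=0.2)
```

In a running system these steps would repeat in a loop, so that each new batch of measurements updates the generated content.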

Could you please describe your relationship with artificial intelligence, its limitations and potential uses in artistic work? How do you see it developing into the future, and what are your ambitions and anxieties about AI at the moment?

I think AI is an exciting field with many possible applications from an artistic and creative perspective. The dichotomy between adherents of strong versus weak AI is particularly important. Believers in strong AI are convinced that in the future, AI systems will be able to feel, think, and perceive like a human mind. Contrary to this belief, a group of people supports the view of weak AI. In weak AI, it is generally accepted that AI systems will never be able to demonstrate self-awareness, real emotions, or cognitive human perception.

I count myself more towards the weak AI camp. This is motivated by observing that almost all modern digital computational systems are inherently finite and predictable. And in my opinion, this is not how the human mind, perception, body, or emotions work. I firmly believe that living entities like humans are unpredictable, chaotic, and hypercomplex.

I’m also convinced that every human creative or artistic process inherits these features of being non-deterministic, fluid, and hypercomplex. And this implies substantial limitations on what can be done with AI for artistic or creative purposes. But this does not take away that many remarkable new technologies, tools, and techniques can be developed with AI.

In a creative and artistic context, I see AI as more like an extension or device that can vastly expand the possibilities of artists. I don’t consider AI a method that would be able to replace human artists and the work they create. So for me, AI doesn’t stand a chance of putting artists out of work.

There’s a lot of talk about the revolution that deep learning can bring in the future. As a mathematician, I consider this more a natural development in AI, which is driven by ever more powerful computers and refined algorithms. However, we should not forget that the first idea of a neural network, which is at the heart of deep learning, was introduced around 1958 by the scientist Frank Rosenblatt. A real leap forward, I think, would be made in creating hybrid AI systems that are partly digital and partly biological. Recent experiments in this field could potentially be a major step in that direction.

In a more general context, AI also holds quite a lot of challenges and possible threats to our everyday lives and society as a whole. Unfortunately, it has reinforced and/or translated existing systems of oppression, discrimination, and inequality in the last two decades.

This is something I’m concerned about, especially as we live in an increasingly technologically driven society where gathering, analysing, and interpreting data becomes more important than human interaction and connection. Having worked as a data scientist for over 16 years myself, I feel there’s a huge amount of work still to be done here.

I have no direct concrete plans to start a new AI-driven project. But most probably, AI and machine learning will play an essential role in the further development of the Mikromedas project.

What’s the chief enemy of creativity?

I would consider perfectionism a chief enemy of creativity. I believe this to be true in almost all creative activities, be it developing a new theory in physics, writing code, producing music, or developing a new project. Of course, I do not claim that perfectionism is all bad. For example, when creating a musical piece, there’s always a balance between deciding that the work is finished and deciding that it needs further development. To avoid the trap of endless refinement, I sometimes use time constraints in my production process.

You couldn’t live without…

Freedom to think the thoughts you want inside of your mind. With all recent evolutions worldwide and the rise of autocratic regimes, it is more important than ever to defend the principle of being able to develop ideas and thoughts unconditionally and to find and be oneself without any restrictions.