Interview by Laura Netz

Julien Bayle works through art and code. In the age of posthumanism, he deals with matters of interaction between humans and technology, using technology to achieve processes he could not achieve without it. He designs programs for creating live AV performances and installations.

The system he is currently designing is complex but powerful: sounds and visuals influence each other in real time. Among his most notable live performances is MATTER (2017), which connects a system based on modular machines to a very advanced video player.

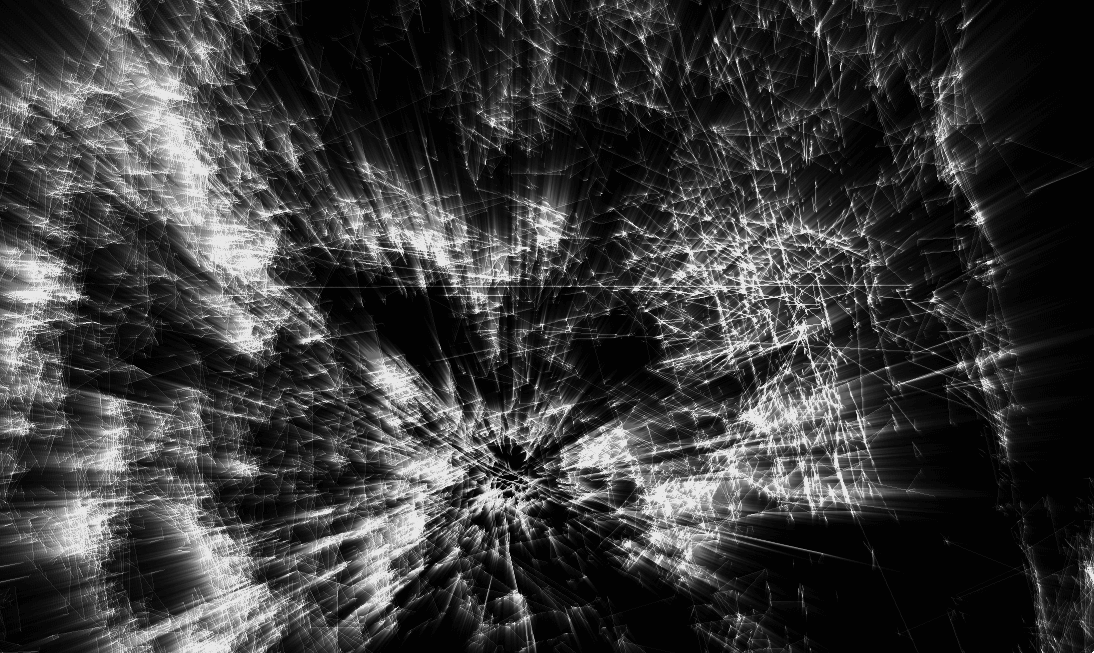

Another impressive work is STRUCTURE (2018), an installation created at MIRA Festival in collaboration with Intorno Labs. It is an immersive installation of sound, stroboscopic light and smoke in which embodied and perceptual experience, as well as psychoacoustics, relate to transcendence and to the exploration of different sides of perception.

STRUCTURE is about the energy in space and the atomic structures behind things. Immersed and disoriented, audiences experience sound dispersing through space via a hyper-spatialised 3D sound system. The result is a generative 3D sound and light installation that creates unbalanced experiences through different sound trajectories.

FRGMENTS, commissioned by Asia Culture Center in Gwangju, South Korea, combines 360-degree video footage of human faces with sound produced exclusively from recordings of machines, field recordings and modular synthesisers. In this work, sound and visuals react in real time within a very complex and dynamic system.

Julien Bayle is currently working on STRUCTURE.LIVE, his first project to be a real collision between humans and inert things. The systems he works with are pre-programmed and coded, without using any pre-recorded sound or video samples. The project is developed at the “Laboratoire de Mathématiques Jean Leray” of the Université de Nantes and has been selected as the “2019 Art & Science collaboration” between the Université de Nantes and the Arts & Techs Labo “Stéréolux”.

His recent album, Violent Grains of Silence, takes a post-Cagean aesthetic approach to sound and results from a process of creation in the anechoic chamber of the Laboratoire de Mécanique et Acoustique (LMA-CNRS). Interested in Curtis Roads’ Microsound (MIT Press) and in granular synthesis, he recorded there in 2016 and composed using only a Eurorack modular synthesiser to amplify silence.

Julien Bayle’s work seems intense, vast and exhausting; as a composer, a sound designer, a digital signal processing (DSP) programmer and a visual artist, he creates works that express a sensitive desire to explore the movement of sound through space.

You work through art and code in the age of posthumanism. How do you deal with matters of interaction between humans and technology? What is your approach?

I use technology to achieve processes that I couldn’t achieve without it. At first, I wanted technology and technique to be only tools. For instance, if I needed to illustrate a concept related to tearing, I wanted a sound that I could tear: OK, let’s use this technique. Just as a tool. But progressively, using techniques drove me to increase my knowledge about the physics of sound, about digital sound and how we can process & alter it in real time. And indeed, the tool and the technology became inspiring.

I recently had a discussion with an Art & Design School Director who told me, as if it were a kind of academic blasphemy, that I “was also interested in technique and not only in concepts”. Sure I was, and I still am. Actually, a lot of art schools in France still think this way. Anyway, the world, nature, humans and everything can inspire me.

I think, as a hypersensitive person, that I can collect feelings, emotions, ideas and concepts around me, like an antenna. I feel like a kind of transducer that converts a specific kind of energy into another kind of matter, which is sound or visuals. I’m just an antenna coupled with a transducer.

Posthumanist philosophies picture a very dark future. While I’m not that pessimistic, I’m rather sceptical and doubtful that humanity could go back and choose another way, one that would make it less fragmented than it is today. I feel irreversibility. We have to “deal with what we did”. I feel close to Mark Fisher when he wrote about Lost Futures.

The critical limit point in the timeline (probably created by technology accelerating so much that we couldn’t think about it fast enough) marked a new stage in humanity: a kind of loop endpoint against which we will bump forever, trying to reproduce and live again (and forever) something we already lived. It reminds me of the concept of an infinite and inevitable loop. We are trapped. I know that’s dark.

As someone who wants to transduce my feelings about this, about this infinite and painful wall-bumping, I want to feel free to use technology or not; I want to enslave technology, and not the opposite. It is my kind of resistance, probably a weak one. But I want to constrain technology to do the specific tasks I choose. I don’t want to think about what I want to create only through technology.

For instance, my current setup doesn’t really have a middle or a centre. Everything could be the centre. I don’t want to use one way but many, and I have struggled with that because a lot of software (tools) pushes us to always work the same way. I’m an Ableton Certified Trainer but I don’t only use Ableton Live. I use many frameworks, even if Max 8 (formerly Max/MSP) is my main one, and I need meta-software like Max because it gives me a way to create software.

It is a software development environment. So, it is like having matter you can assemble and combine into new tools. This is a meta-tool that gives me freedom. Yes, tools have to give you more freedom. As soon as a tool or a technique provides more freedom, I can use it.

Your work STRUCTURE is an immersive installation created with sound, light and smoke. Are you interested in embodied experiences? Is your work related to transcendence? Are you exploring a different side of perception?

STRUCTURE.INSTALLATION is about the energy that populates and fills a space: the energy that is in between, between humans, between humans and buildings, between atoms. I tried to create tension in the space using both sound & light elements, with smoke creating and representing what fills the space. I wanted people to be immersed in dense matter.

STRUCTURE came at a special moment in my life and, as with all of my art projects, this moment coloured and loaded my artwork and probably embodied more of myself in the piece. I feel so close to that piece that I can imagine people experiencing it as if they were walking into my mind. We can consider this work as related to transcendence in the sense of “a work that is me, outside of me”. When we installed it and I tweaked the sound and the light, I was floating between my own body and my own feelings, which were outside of me; it was very deep and emotional for me.

Perception and the limits of perception are concepts I’m trying to address in all my art. As I work with the Laboratoire de Mécanique et Acoustique (LMA-CNRS) in Marseille, I have always been interested in perception and psychoacoustics. The limits: where the borders lie between the things we can perceive and the things we cannot. STRUCTURE.INSTALLATION is also about this.

Some sounds travel through the room, and sometimes you can feel the energy in the opposite corner of the space but cannot hear it well. In that case, it is volume perception that is addressed. Other sounds travel so fast that the simple fact that they are moving creates a kind of amplitude modulation, and then we can perceive weird overtones (new frequencies that appear only because of the movement-based modulation and that are not in the original sound source I’m generating).
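The modulation effect described here can be checked numerically. This is a minimal sketch, assuming a single static sine source whose gain at one loudspeaker rises and falls once per pass (a raised-cosine envelope); the sample rate and frequencies are hypothetical, and a real multi-speaker rotation with a proper panning law would give a more complex spectrum. Multiplying a sine at f_c by an envelope at f_r creates new components at f_c − f_r and f_c + f_r.

```python
import cmath
import math

SAMPLE_RATE = 1000   # Hz; illustrative value only
CARRIER_HZ = 100.0   # static frequency of the source (hypothetical)
ROTATION_HZ = 20.0   # passes per second past the speaker (hypothetical)

def speaker_signal(n_samples):
    """Signal at one speaker while the source moves periodically past it.

    The speaker gain rises and falls once per pass, so the static sine is
    multiplied by a slow envelope: that multiplication is amplitude modulation.
    """
    out = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE
        gain = 0.5 * (1.0 + math.cos(2 * math.pi * ROTATION_HZ * t))
        out.append(gain * math.sin(2 * math.pi * CARRIER_HZ * t))
    return out

def magnitude_at(signal, freq_hz):
    """Normalised magnitude of a single DFT bin (naive, for illustration)."""
    n = len(signal)
    k = round(freq_hz * n / SAMPLE_RATE)
    acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
    return abs(acc) / n

sig = speaker_signal(SAMPLE_RATE)  # one second of audio
for f in (80, 100, 120, 50):
    print(f, round(magnitude_at(sig, f), 3))
```

With these values, the bins at 80 Hz and 120 Hz each show a magnitude of 0.125 beside the carrier's 0.25, while an unrelated bin such as 50 Hz stays at zero: the two extra components exist only because of the movement.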

This is also something induced by the space (and the movement) itself: something we can perceive, but which is like a spectral sound (in the sense of spectre, ghost). STRUCTURE.INSTALLATION also plays with the frequency spectrum by splitting the whole audible spectrum into different bands. Each band is more or less perceptible to people, and I also split these different parts of the same sound source across physical spaces.

This produced accumulations of sound in different parts of the 3D space, as if I were pouring sounds here and there, at one point making a kind of metallic rain that fell onto a carpet of very low-frequency sound located near the ground.
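Splitting one source into frequency bands that can then be placed separately in the room can be sketched as follows. This is an assumption-laden illustration, not the installation's actual processing: it uses gentle first-order (one-pole) filters and takes each band as the difference between successive low-pass outputs, so the bands always sum back to the original signal. A production system would more likely use steeper crossovers such as Linkwitz-Riley filters; the crossover frequencies below are hypothetical.

```python
import math

def one_pole_lowpass(signal, cutoff_hz, sr):
    """First-order low-pass filter (exponential smoothing)."""
    a = math.exp(-2 * math.pi * cutoff_hz / sr)
    y = 0.0
    out = []
    for x in signal:
        y = (1 - a) * x + a * y
        out.append(y)
    return out

def split_bands(signal, crossovers_hz, sr):
    """Split a signal into len(crossovers_hz) + 1 bands, lowest band first.

    Each band is the difference between successive low-pass outputs, so the
    bands telescope back to the original signal (up to rounding error).
    """
    lows = [one_pole_lowpass(signal, f, sr) for f in sorted(crossovers_hz)]
    bands = [lows[0]]
    for lo, hi in zip(lows, lows[1:]):
        bands.append([h - l for h, l in zip(hi, lo)])
    bands.append([x - l for x, l in zip(signal, lows[-1])])
    return bands

SR = 48000
bright = [math.sin(2 * math.pi * 10000 * n / SR) for n in range(4800)]  # a 10 kHz test tone
low, mid, high = split_bands(bright, [200, 2000], SR)  # hypothetical crossovers
```

Each band could then be given its own position in the space, for instance the low band near the floor and the high band higher up.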

The system by Intorno Labs that I used to produce STRUCTURE.INSTALLATION gave me a very easy way to spread and locate my sources in the physical exhibition space at Fabra i Coats, where MIRA Festival took place. The space itself seemed to produce the sound, and the smoke diffused the stroboscopic light as if the space itself were dense and full of energy.

It is this energy that I relate directly to the feeling I wanted to express. I frenetically wanted the audience to be… immersed inside my feelings, feeling lost and disoriented, as if hugged by the dense clouds and the low-frequency sounds.

Actually, along with FRGMENTS, STRUCTURE.LIVE is my most personal work, my most emotional art piece to date. Things are already different, and will be a bit different forever, after these.

You mention you are working on a new project, STRUCTURE.LIVE. Are you creating an AV piece where dance and performance are integrated into sound and visuals? What are the new elements included in your work? Can you develop the concept of a real collision between humans and inert things?

From the beginning until about 2017, I was working with systems that were “generating everything from scratch”. My programs made with Max 8 or other programming frameworks like Processing and openFrameworks were generating everything without using ANY sounds or elements from outside the computer.

During my lectures or discussions with audiences, I used to say that my computer (and I?) was curled up on itself. Equations and algorithms inside the computer, like many other dynamic processes, were generating everything; my systems were pre-programmed/coded, controlled and constrained by me, but used no pre-recorded sound or video samples.

Having total control was very important to me. This absolute need for control drove me to record the process (the code) that was able to produce sound and visuals, instead of recording the rendered sound & visuals themselves. I was more interested in potentiality than in the final rendering. Again, it was about keeping control, not closing any doors, and keeping all ways possible until the end. It was a way to keep myself from choosing…

Progressively, I had to start owning more of what I was doing, and of what I was living in my personal life too, and I started to choose and decide more easily and radically. I wanted to write more, to record the final rendering, even if I was still (and still am) using algorithms to render it. I used to say that I started to accept the past more deeply and also to accept the possibility of “not being able to change some facts”.

This is when I started doing a lot of field recording and sound recording in the studio. This is the moment when I started my project FRGMENTS, commissioned by Asia Culture Center in Gwangju, South Korea, and co-produced by my studio and the art centre itself. This project was a turning point for me. All the visuals come from a series of footage we shot beforehand in my studio.

People were filmed with a 4K camera, and all the videos were made following a specific protocol in which we had people rotate so that we could capture a 360° view of their faces. Sounds were recorded exclusively from machines, field recording and modular synthesizers. This is a common point in all my works since the beginning.

These raw sound & visual materials were used as the root of my FRGMENTS installations & live performance. In both forms, the system (and the system and I, in the case of the live performance) mangles and alters the sounds; the visual system is programmed to listen to the sound signal in real time and to react in one way or another.

This depends on how I decide to connect the sound to the visuals each time: in each installation context, or during each “song” in my live performance. Sometimes a specific sound descriptor (like noisiness) influences the brightness of the picture, and in another context the same descriptor is linked to the way the video is fragmented. This is a very complex and dynamic system programmed in the studio.
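The interview does not say which descriptor implementation or mapping the system uses. As a hedged sketch of the idea, the code below assumes spectral flatness (geometric mean over arithmetic mean of the power spectrum, near 0 for a pure tone and higher for noise) as a stand-in for "noisiness", and maps it to picture brightness through a hypothetical `BrightnessMapper` with one-pole smoothing so the image does not flicker from frame to frame.

```python
import cmath
import math
import random

def power_spectrum(frame):
    """Naive DFT power spectrum (bins 1..N/2, DC skipped); fine for short frames."""
    n = len(frame)
    powers = []
    for k in range(1, n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(frame))
        powers.append(abs(acc) ** 2)
    return powers

def spectral_flatness(frame, eps=1e-12):
    """Geometric mean / arithmetic mean of the power spectrum, in (0, 1]."""
    p = [x + eps for x in power_spectrum(frame)]  # eps avoids log(0)
    geo = math.exp(sum(math.log(x) for x in p) / len(p))
    return geo / (sum(p) / len(p))

class BrightnessMapper:
    """Hypothetical mapping: noisiness -> brightness in [0, 1], smoothed over time."""

    def __init__(self, smoothing=0.8):
        self.smoothing = smoothing  # 0 = no smoothing, close to 1 = very slow response
        self.value = 0.0

    def update(self, noisiness):
        target = min(1.0, max(0.0, noisiness))  # clamp to a valid brightness
        self.value = self.smoothing * self.value + (1 - self.smoothing) * target
        return self.value

N = 64
tone = [math.sin(2 * math.pi * 4 * i / N) for i in range(N)]  # pure tone: low flatness
rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(N)]            # white noise: high flatness
mapper = BrightnessMapper()
for frame in (tone, noise, noise):
    print(round(mapper.update(spectral_flatness(frame)), 3))
```

Swapping the target of `update` (brightness here) for another visual parameter, such as a fragmentation amount, is how the same descriptor could drive a different visual behaviour in another context.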

I think STRUCTURE.LIVE is the work in which I will inject more physical reality, more of humans, into my systems. Dance is not directly part of the project. As in all my pieces, I seek to fill time & space. I often use graphical analogies to illustrate the concept of time: I talk about “space” to define time intervals. My work is about populating space (and time) with elements; in that way, it is closely related to architecture and design.

So, since dancers populate space & time with movements, I started to work on prototypes that could involve them and capture their movements. I’m currently working on the computer system itself, the one that will spread and deploy the audio-visual matter: the core of the piece.

Production time will be divided into three main parts: sound composition, visual system programming and human motion capture. I’ll use Kinect and another kind of system that can capture the inner static structures of rooms and corridors. I want to inject reality into the computer world. I want to show how systems try to represent reality, and how computers could frenetically try to imitate us.

Sampling reality is still something new for me. I want to use it in my visuals here and distort it on the screen as if the computer could show us how it could understand and feel about us. Imperfections and failures are part of us. Humans want computers to learn so much about us that they could imitate our own weaknesses.

In STRUCTURE.LIVE, I want to show visuals that will sometimes be real mayhem and chaos, as if I wanted to show how human I feel and how much I don’t want the computer to feel anything. The only important experience for me is the sensitive experience. It is what will keep us human.

Technology gives me ways to keep the sensitive experience highly immersive. By working with Intorno Labs on STRUCTURE.INSTALLATION, I was lucky to find experts who could put tools in my hands for designing a generative 3D sound & light installation without even thinking about the technical layer (layout) of the exhibition room in the end. Basically, I was able to work and think only about how I wanted to spread my sounds in the space, and to keep the work within that territory without any technical sound-system considerations.

A source can be virtually placed at a position x, y, z in the room with a specific diffusion factor. Whether the room was circular, a dome, or a rectangle with more powerful speakers at the ceiling than at the floor, their tool dealt with it and rendered my piece exactly the same way everywhere, whatever the speaker configuration. So, with this tool, I could devote all my creation time to creating: I played with sound trajectories.
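The interview does not describe how Intorno Labs' tool works internally. One published, layout-independent technique for placing a virtual source at x, y, z over an arbitrary speaker arrangement is distance-based amplitude panning (DBAP), sketched below under loud assumptions: the mapping of the "diffusion factor" to DBAP's spatial blur term, the 6 dB rolloff and the speaker coordinates are all hypothetical.

```python
import math

def dbap_gains(source, speakers, blur=0.1, rolloff_db=6.0):
    """Distance-based amplitude panning (DBAP) sketch.

    source:     (x, y, z) virtual position of the sound.
    speakers:   list of (x, y, z) speaker positions, in any layout.
    blur:       spatial blur added to every distance; here it stands in for a
                'diffusion factor' (assumption): larger values spread the
                sound over more speakers.
    rolloff_db: attenuation per doubling of distance.
    Returns one gain per speaker, normalised so the total power is 1.
    """
    a = rolloff_db / (20 * math.log10(2))  # distance exponent derived from rolloff
    weights = []
    for sp in speakers:
        d = math.sqrt(sum((s - p) ** 2 for s, p in zip(source, sp)) + blur ** 2)
        weights.append(1.0 / d ** a)
    k = 1.0 / math.sqrt(sum(w * w for w in weights))  # constant-power normalisation
    return [k * w for w in weights]

square = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0)]  # hypothetical layout
print([round(g, 3) for g in dbap_gains((0.5, 0.5, 0.0), square)])  # centred source
```

Because gains depend only on distances, the same source position can be rendered over a dome, a ring or a box of speakers simply by changing the `speakers` list, which is the layout independence the text describes.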

For instance, I was filling the space using different trajectories for my sound sources: one source lay statically on the ground while another circulated in the room in a kind of climbing spiral. In another context, I made all sound sources move chaotically, populating the room in a very random way. In yet another, I created a kind of wave of sound progressing from one side of the room to the other.

It was very disorienting, making the energy of the space very unbalanced. All these trajectories are ways of drawing things in the space of the room. All these virtual paths for my sound sources followed specific curves generated by parametric equations. This is where parametric design can be observed in this work.
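A climbing spiral is one of the simplest parametric curves: a helix whose angle grows with time while its height rises linearly. The sketch below is illustrative only; the radius, turn rate, climb rate and control rate are hypothetical values, not those of the piece. Each sampled point would be sent to a spatialiser as the source position.

```python
import math

def climbing_spiral(t, center=(0.0, 0.0), radius=2.0, turns_per_s=0.25,
                    climb_per_s=0.1, z0=0.0):
    """Point (x, y, z) on a helix at time t in seconds: circles around
    `center` while rising by `climb_per_s` metres per second."""
    angle = 2 * math.pi * turns_per_s * t
    return (center[0] + radius * math.cos(angle),
            center[1] + radius * math.sin(angle),
            z0 + climb_per_s * t)

def static_on_ground(t, position=(1.0, 1.0, 0.0)):
    """A source that stays put on the floor, whatever t is."""
    return position

def sample_path(path, duration_s, rate_hz):
    """Sample a trajectory function at a fixed control rate."""
    steps = int(duration_s * rate_hz)
    return [path(i / rate_hz) for i in range(steps + 1)]

spiral = sample_path(climbing_spiral, duration_s=10, rate_hz=20)
floor = sample_path(static_on_ground, duration_s=10, rate_hz=20)
```

Other behaviours, such as a wave crossing the room, only need a different function of `t` passed to `sample_path`.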

I’d like to push the concept further and create sound trajectories from real 3D building models. Maybe that could be the next version of STRUCTURE.INSTALLATION. Actually, I’d like to create a sound & sculpture exhibition with multiple rooms, in which I could exhibit concrete sculptures, each representing a specific sound, with highly spatialized sound in each room too.

That would be like making the sound even more tangible. Something between architecture and sound installation. MaMO in Marseille, France, would be an ideal place for that.

What are the biggest challenges of developing and producing STRUCTURE.LIVE?

The system I’m designing is quite complex. As the visuals are based ONLY on real captures of the physical and tangible world, represented by a human moving, standing or statically mimicking a statue, I need to design a system able to grab this matter on the fly, to process it the way I want and to display it.

It is like taking a piece of reality and twisting it, distorting it to make it more like what I’d like it to be, or more like what I think it is from my point of view. When I say it is my most personal work to date, this is what I mean: the processes and analogies leading from my feelings to the final piece are shortened; I’m directly tweaking, changing reality itself while I’m changing the visuals.

I’m currently coding the part that will handle and display the visuals. I’m still using Max 8 for this part because there is no software that could render exactly my feelings here. Off-the-shelf software is way too simple and not flexible enough for me. This is why I’m designing a system, again. It is hard at some point, not because of the underlying techniques, but because of the global design.

The system has to be powerful enough to expand territories of expression, but not too complex, because I need to be able to use it intuitively when I’m composing and designing with it. It is crazy to design a whole system and only then start to create with it. Actually, some parts are now so easy for me to code, and my habits so intuitive, that even on such advanced projects I’m more focused on the final rendering and on how it can or can’t match my expression, my purpose.

The biggest challenge here is doing everything within the same global process. I’m designing sounds and assembling some of them as “almost pre-written sequences” while keeping the flexibility to play them in many different ways; I’m designing the visual system, and also the system that will analyse the sound in real time and feed the visuals to influence them.

This is very involving, intense, huge and exhausting. I have to keep the whole thing very consistent, coherent and dense, in order to keep the energy and the meaning of what I want to express very high. It is so important that I’m involved in each part. Otherwise, I could end up coding visuals without even knowing about the sound, about the feelings I want to express through the sound fields generated.

Sometimes I don’t know if I’m a composer, a sound designer, a digital signal processing (DSP) programmer, a visual artist or just someone who wants to express so many things that it takes this technology to extract all the feelings I want to spread out of my mind, out of my body.

It seems that your noisy compositions are influenced by Xenakis’s granular synthesis. Does your recent album Violent Grains of Silence have a post-Cagean sound aesthetic? Can you tell me a little more about the process of creating the album in the lab?

I have been interested in granular synthesis and pulsar synthesis ever since I first read about them. A book that also changed my approach to these fields is Curtis Roads’ Microsound (MIT Press). It requires some engineering-level knowledge of sound and acoustics, but that wasn’t an issue for me. It illustrated a lot of concepts that I had come across intuitively.

For instance, the idea of considering sound as a space, as a territory I can explore. I can fly over the territory, stop, zoom and seek details in a particular place. Granular synthesis (or re-synthesis) is about taking small bits, small elements of a sound, and looping them more or less fast, spreading them across the stereo space and changing their pitches.

Sampling reality (real-life sounds, ambient and environmental sounds, machine sounds) is now something very natural for me. I like to use these previously recorded sounds as raw material for further processes. Granular synthesis is one of them.

It is a way for me to zoom into sounds, to take micro-fragments of sound (which represent micro-fragments of time), and to stretch them and re-order them into another matter that we can cut, re-assemble, glue and spread, filter and enhance or degrade.
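The granular process described above (small windowed bits of a recording, re-ordered, pitch-shifted and spread across the stereo field) can be sketched as a generic textbook granulator. This is not the artist's patch: the grain length, density, pitch range and the hypothetical `granulate` function are assumptions chosen for illustration, with linear interpolation for pitch shifting and an equal-power pan law.

```python
import math
import random

def hann(n):
    """Hann window of length n (n >= 2): fades each grain in and out."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def granulate(source, sr, out_seconds, grain_ms=50, density_hz=40,
              pitch_range=(0.8, 1.25), seed=1):
    """Granular re-synthesis sketch; returns (left, right) sample lists."""
    rng = random.Random(seed)
    out_len = int(out_seconds * sr)
    left, right = [0.0] * out_len, [0.0] * out_len
    grain_len = int(sr * grain_ms / 1000)
    window = hann(grain_len)
    for _ in range(int(out_seconds * density_hz)):
        onset = rng.randrange(0, out_len - grain_len)  # where the grain lands
        pitch = rng.uniform(*pitch_range)              # playback rate -> pitch shift
        span = int(grain_len * pitch) + 1              # source samples the grain reads
        start = rng.randrange(0, len(source) - span)   # which tiny bit of the source
        pan = rng.random()                             # 0 = left, 1 = right
        gl, gr = math.cos(pan * math.pi / 2), math.sin(pan * math.pi / 2)
        for i in range(grain_len):
            pos = start + i * pitch
            j = int(pos)
            frac = pos - j
            sample = source[j] * (1 - frac) + source[j + 1] * frac  # linear interpolation
            s = sample * window[i]
            left[onset + i] += s * gl
            right[onset + i] += s * gr
    return left, right

SR = 8000
src = [math.sin(2 * math.pi * 440 * n / SR) for n in range(SR)]  # stand-in for a recording
left, right = granulate(src, SR, out_seconds=0.5)
```

Shorter grains and higher densities push the result from recognisable fragments towards a continuous texture; the seed makes a given cloud of grains reproducible.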

During 2016, I had some hard times in my personal life and I wanted to dump all these feelings outside of me, to plunge them into a different matter outside of my body. I chose to immerse myself in one of the quietest places in the world: the anechoic chamber of the Laboratoire de Mécanique et Acoustique (LMA-CNRS). In that space, I felt something special.

A friend of mine came with me, but she couldn’t stay inside for more than 20 minutes. I, on the other hand, felt comfortable there for hours. One explanation I have for this comfort is that I was keeping a lot of noise inside my mind, for personal reasons, and that this noise was compensating for the absence of any noise in the room.

I was there to record things. I didn’t want to produce any sounds myself, from my mouth or with my systems. I thought a lot there. And then I ended up recording the silence, the void. I placed two Neumann microphones in the centre of the room, closed the door and recorded for two hours. I didn’t especially want to talk about the fact that we cannot record silence, because by doing so we disturb the very space in which we are recording, but it ended up being about that too.

It was like being so involved in something that we couldn’t even distance ourselves from it. As if there was no way for me to distance myself from the thing I wanted to understand the most and for which I needed distance. The result was a two-hour WAV file of the void. But not a random void: the void of this particular moment, the void of the end of July 2016. I processed this recording by normalizing it, brutally.

The result was a full two-hour file of noise. It was hard for me to consider it as a specific noise and not just noise. Then I cut it into pieces, each with a specific duration representing a specific moment of the year 2016 (I basically translated each date, in day-month-year format, into the duration I had to use for each track).
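"Normalizing brutally" most likely refers to peak normalization, which multiplies every sample by the same gain so that the loudest one reaches full scale; the interview does not give the exact settings, so the sketch below assumes a 0 dBFS target. For a near-silent recording the gain is enormous, which is why the noise of the room, microphones and preamps becomes the material itself. The sample values are hypothetical.

```python
import math

def peak_normalize(samples, target_peak=1.0):
    """Scale a non-empty list of samples so the loudest reaches target_peak.

    Returns (scaled_samples, gain_in_dB).
    """
    peak = max(abs(x) for x in samples)
    if peak == 0.0:
        return list(samples), 0.0  # true digital silence: nothing to amplify
    gain = target_peak / peak
    return [x * gain for x in samples], 20 * math.log10(gain)

quiet = [0.001, -0.0005, 0.0002]  # hypothetical near-silent samples
loud, gain_db = peak_normalize(quiet)
print(round(gain_db, 1), max(abs(x) for x in loud))
```

A peak of 0.001 (−60 dBFS) calls for roughly +60 dB of gain, so anything 60 dB below full scale in the original ends up in the audible range.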

Then I started the releasing and relieving process of composition with only my Eurorack modular synthesizer. I needed to improvise my patching on the fly, to prepare something without any constraints, intuitively. It was part of the discharging step of my process. I made one patch for each track. I used the slices of amplified silence sometimes directly in the sound chain and sometimes as a modulator.

In the first case, the noise itself can be heard, possibly heavily processed and modulated, but it can be heard. In the second, I generated textures used as sound sources and used sample & hold techniques with my recorded noise to modulate these sources. The recorded noise is used either as a sound source or as a modulator.
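Sample & hold, the technique named here, grabs the value of a signal on each clock tick and holds it until the next tick, turning continuous noise into a staircase of random steps that can drive another parameter. The sketch below is a generic software illustration, not the Eurorack patch: seeded random numbers stand in for the recorded noise, and the clock period, base frequency and modulation depth are hypothetical.

```python
import math
import random

def sample_and_hold(modulator, clock_period):
    """Grab the modulator value every `clock_period` samples and hold it."""
    held = 0.0
    out = []
    for n, x in enumerate(modulator):
        if n % clock_period == 0:
            held = x
        out.append(held)
    return out

def modulated_oscillator(control, sr, base_hz=220.0, depth_hz=110.0):
    """Sine whose frequency follows the control signal (phase-accumulating)."""
    phase = 0.0
    out = []
    for c in control:
        out.append(math.sin(phase))
        phase += 2 * math.pi * (base_hz + depth_hz * c) / sr
    return out

SR = 48000
rng = random.Random(7)
noise = [rng.uniform(-1.0, 1.0) for _ in range(SR)]  # stand-in for the recorded noise
steps = sample_and_hold(noise, clock_period=4800)    # 10 new values per second
texture = modulated_oscillator(steps, SR)            # pitch jumps on every clock tick
```

Accumulating phase rather than computing `sin(2 * pi * f * t)` directly keeps the waveform continuous when the frequency jumps, so the steps change pitch without clicks.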

It reminds me of a sound recording I released on ETER LAB, a South American sound label I really like: Void Propagate. In this one, I used the same kind of technique of amplifying silence. I used only one reverb effect and fed nothing but a loose wire into it.

Basically, I had connected a wire to its input, and this wire was not connected to anything at the other end. This module (Erbe-Verb by Make Noise) can easily go into a kind of feedback loop depending on how you use it, and I was always looking for this border when I was live recording the piece. It was again the same thing with silence.

In both pieces, it was this idea of using the environment (the anechoic room and the microphones and their preamps, or the reverberation effect) as something more important than the signal itself. Actually, there was no signal, there was silence. I felt close to the lowercase musical genre with these two releases.

Silence was complicated for me, a long time ago. It meant death. It became something different when I met a very close person who used silence as a way of communicating. I understood that silence could be a signal.

You have been collaborating with various labs, such as LMA and Intorno Labs. How do you think labs can be a transformative tool for transdisciplinary arts and sciences?

I have been collaborating with the Laboratoire de Mécanique et Acoustique for several years. I didn’t have real residencies there, but I did in some other labs, and they gave me a lot of discussions and ideas. Actually, researchers and artists have always been close.

Both are researching, representing concepts, trying to translate the world into other, more intelligible languages, trying to make abstract concepts and invisible things more concrete and understandable, or just more tangible for others. That is already a lot in common.

While I have often had collaborations related to tools and how we can design them, the most interesting part was, and still is, discussion. Discussing with researchers is inspiring in many ways. First, these discussions open new doors of knowledge. This is the first level.

It provides a lot of new ideas for our own creative practice. Then, at least in my case, these discussions also drive me to build more bridges between concepts, to associate more nodes of ideas I already have, to make a kind of cluster of items in my head, and very often I feel relieved after the discussion because my mind is clearer.

Labs are offering more and more art/science residencies. I’m very interested in working more with labs myself, but in more long-term ways. It is currently hard to get into this kind of collaboration unless you do a PhD, which is also hard to fund.

I really think collaboration with labs can drive me to dig deeper into my research, but the collaboration has to leave a lot of room for freedom. In many proposals, everything is planned from beginning to end, and we artists need time and floating moments.

STRUCTURE.LIVE requires some science related to how we can display and visualize a set of points in 3D space. This is related to point cloud techniques, and I have been invited by Stéréolux and the Laboratoire de Mathématiques Jean Leray at the Université de Nantes to propose a workshop for students. I have started to lead it with a mathematician researcher from the lab.

The topic is related to the visual part of my upcoming live performance. During the workshop, I’ll have a specific residency there in Nantes and I’ll make all my captures, which will result in meshes. These meshes will be the raw matter for the visual artwork of the piece. In this case, Stéréolux and the university provide a lot of room for freedom. This is the perfect case in which I really feel comfortable working on a project I want to produce and teach.
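The most basic step in displaying a captured point cloud is projecting each 3D point onto the 2D screen. The sketch below uses a simple pinhole-camera model; the camera-space coordinates, focal convention (focal length relative to half the image height) and 1920×1080 resolution are assumptions for illustration, not details of the project's pipeline, which also involves building meshes.

```python
def project_points(points, focal=1.0, width=1920, height=1080):
    """Pinhole projection of camera-space points (x, y, z) to pixel coordinates.

    z > 0 means in front of the camera; points behind it are skipped.
    `focal` is expressed relative to half the image height (an assumption).
    """
    half_w, half_h = width / 2, height / 2
    scale = focal * half_h
    pixels = []
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera: not visible
        u = half_w + scale * x / z  # perspective divide: farther points move to the centre
        v = half_h - scale * y / z  # screen y grows downward
        pixels.append((u, v))
    return pixels

cloud = [(0.0, 0.0, 5.0), (1.0, 1.0, 1.0), (0.0, 0.0, -1.0)]  # hypothetical points
print(project_points(cloud))
```

Distorting a captured body on screen can then be as simple as perturbing the 3D points before this projection step.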

You have worked internationally with institutions such as SAT Montreal. What did you develop there? Do you have any collaborations coming soon?

At the Société des Arts Technologiques in Montréal, I designed a site-specific live improvisation system. It was based on my Eurorack modular machines for all sound sources and a software-based system for all sound spatialization. I didn’t use any existing system but assembled state-of-the-art HOA Library objects in Max/MSP.

The core of the project was John Chowning’s quote: “We sense the space”. The main idea was to generate sounds, make them move in the room and use the spherical environment itself as a resonator and a modulator for the sound.

I generated a sound with a static frequency and made it rotate so fast that we could hear new harmonics appearing: a kind of overtones, like in amplitude modulation-based synthesis. It was really interesting and unusual to generate sounds and modulate them using the space itself.

Visuals were based on the cube map technique, and with my assistants we aimed at “breaking the sphere” geometry by displaying grids and cubes with this technique. The results were quite interesting, but I didn’t enjoy the spherical environment. It forces us to build the whole piece for the centre, and I don’t really like the idea of being able to enjoy the piece only from one point (except for proper and beautiful anamorphosis…). I’m not completely convinced by all these domes appearing everywhere. This is too artificial compared to reality.
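The interview does not detail how the cube maps were used. As a general illustration of the technique: a scene is rendered into the six faces of a cube, and any viewing direction, for example each point of a dome, is then looked up by picking the face of its dominant axis and a position on that face. The sketch assumes the sign convention of the OpenGL cube-map face table; other engines orient the faces differently.

```python
def cube_map_lookup(direction):
    """Return (face, s, t) for a non-zero 3D direction, with s, t in [0, 1].

    The face is chosen by the component with the largest magnitude (the
    major axis); s and t follow the OpenGL cube-map convention (assumed).
    """
    rx, ry, rz = direction
    ax, ay, az = abs(rx), abs(ry), abs(rz)
    if ax >= ay and ax >= az:  # X is the major axis
        face, ma = ("+x", ax) if rx > 0 else ("-x", ax)
        sc, tc = (-rz, -ry) if rx > 0 else (rz, -ry)
    elif ay >= az:             # Y is the major axis
        face, ma = ("+y", ay) if ry > 0 else ("-y", ay)
        sc, tc = (rx, rz) if ry > 0 else (rx, -rz)
    else:                      # Z is the major axis
        face, ma = ("+z", az) if rz > 0 else ("-z", az)
        sc, tc = (rx, -ry) if rz > 0 else (-rx, -ry)
    if ma == 0:
        raise ValueError("direction must be non-zero")
    return face, (sc / ma + 1) / 2, (tc / ma + 1) / 2

print(cube_map_lookup((1.0, 0.5, 0.0)))  # a direction mostly along +x
```

Because every direction resolves to exactly one face, grids and cubes drawn into the faces stay continuous when wrapped around the dome.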

I love buildings and rooms, square or not. I’d prefer to make installations and live performances, even immersive ones, in real buildings. This is more me as an artist changing reality than me as an artist changing something that exists only for my art. I need to bump into reality if I want to alter it.

I don’t have any collaboration currently running with any art centres. I’d like to have some, as this can be a nice framework for me to produce art.

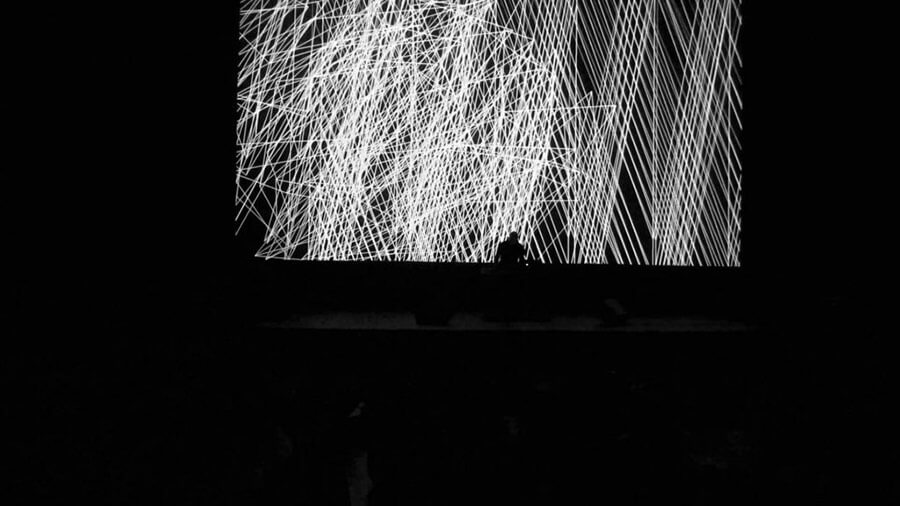

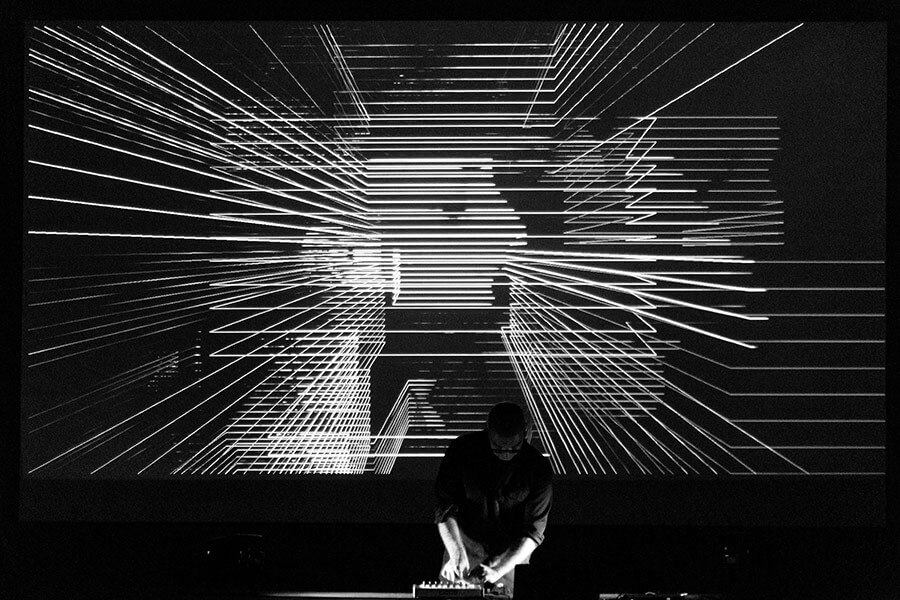

However, I’d really like to launch the next step of MATTER. MATTER was such a nice and deep project with my friend and artist Sebastian Sarti: we only played it once, which is a real shame. We built a system based on modular machines on my side and a very advanced video player I designed for Sebastian. Both systems were connected.

His system received pulses from my system (clocks, voltage variations related to some sound modulators I was using) and my system received ‘pulses’ brilliantly placed by Sebastian in specific frames of his video. Neither of us knew when the other’s system would emit its pulse, and each pulse acted as an influence on our own system.

So, when a bubble exploded in a playing video, my modular system received a pulse… It was up to me to use it here or there to change the sound. The best use was to connect it to my reverb module. The pulse was like “bumping” the reverberation space, making a kind of VIUOUSSSSSHHHHHH resonate. It was VERY dark and deep. Sebastian shot his videos himself with very DIY equipment. The video shown reflects those concepts quite well. We are currently looking for a residency program and some funds to make a new version.

Your chief enemy of creativity?

While I’m designing sounds, visuals and systems, sometimes I can’t choose; that drives me to keep things open, and it kills me. Keeping everything open means you don’t write, you don’t create. This is related to keeping the system omnipotent. Omnipotent means that it can do everything, and that often amounts to: it cannot do anything.

The flow of ideas, of images in my mind is so huge, big and sometimes violent that I need to sort, drop, cut, and decide in order to write something, I mean, in order to deliver something, to render some pieces.

This is sometimes hard, but I want to leave a trace with my pieces so I have to do this to keep the creativity flowing until the rendered form.

You couldn’t live without…

I could write a big list. I’ll keep it to things, because there are many humans without whom I couldn’t live. I think I’ll just say: my computer. It sounds weird and dependent, but with it, I can create. Of course, I’d miss my sound recorder and my machines, but if I had to keep only one thing, I’d choose this one. I can design visuals, write, and use big memory storage.