Text by Kyle Dent, Richelle Dumond & Mike Kuniavsky

Because AI is so powerful, the unexpected consequences of AI in all its forms, from simple pattern recognition to complex planning, prediction, and reasoning, are particularly alarming. AI intersects with our lives in ways we may not even be aware of. While AI technology has done many amazing and useful things, it has also failed us significantly. In our labs at the Palo Alto Research Center (formerly Xerox PARC), we have developed a process to mitigate the unforeseen and harmful impacts of the technology we design and develop. Some of the challenges AI poses, such as those that arise when it is trained on biased data, are clear.

Others are unforeseen, and we may not even learn of them until after the technology has been broadly deployed. Unfortunately, we do not have a ready-made solution that can automatically resolve the ethical issues of AI, but we have developed a review framework for ourselves and others. Those of us designing and developing AI-driven experiences can use it to evaluate the potential negative implications of our work and to identify its specific dangers.

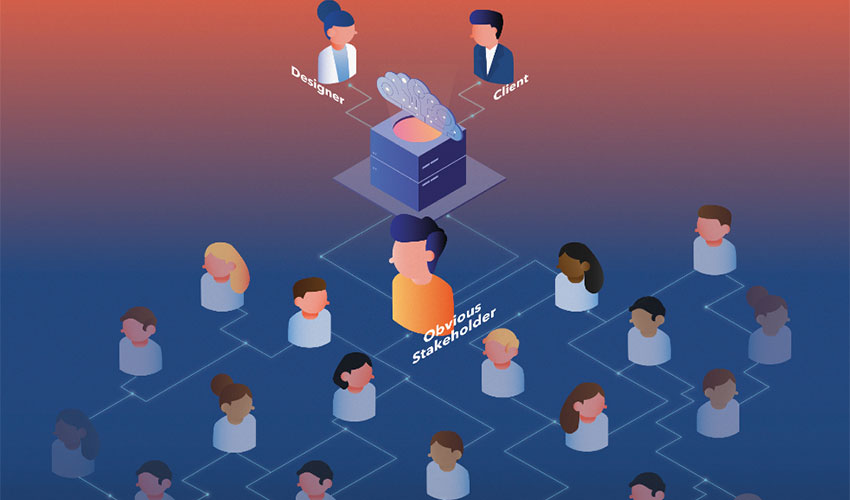

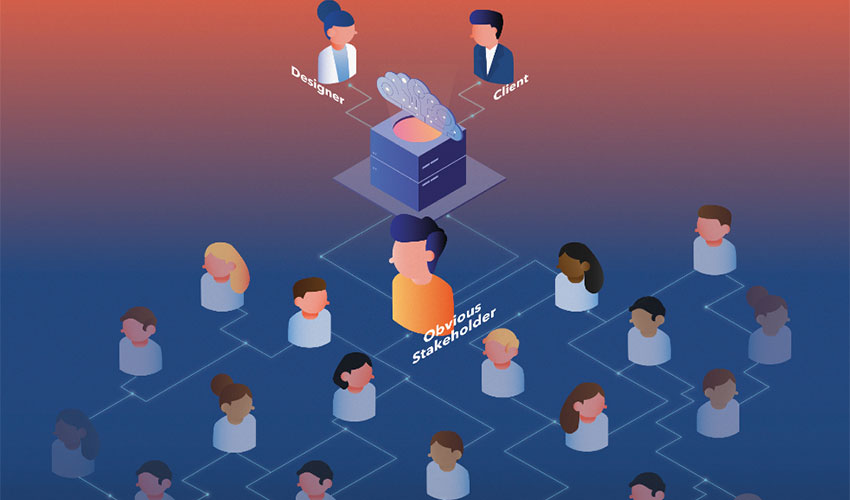

Over the past couple of years, as we have seen news reports about harmful failures of AI and about how it has been used to propagate many of society’s historical biases, we have become interested in understanding the impact of our own work. We have noticed that people now place a great deal of trust in technology and erroneously believe in its inherent neutrality. We realized that it was incumbent on us and our colleagues to understand the risks we might introduce through our work. Among our highest priorities is transparency. When we identify specific risks or make assumptions, they need to be surfaced and communicated to all stakeholders, including those affected by the AI. Technology development is a continual negotiation among the creators of the technology, the organizations that sponsor the work, and the end users who are the consumers or targets of that technology.

There is never just a single ethical decision to make in developing a specific technology. Instead, a series of decisions about the focus, scope, transparency, and experience of a technology arises throughout a project, and each requires ethical consideration, deliberation, discussion, and consensus.

Many technology companies have recognized the potential for harm from AI and have published guidelines for their employees. We think that guidelines and checklists are insufficient. In general, simple rules and lists of values don’t go far enough. It’s not just a question of standards but of processes: design review, user research, and data collection.

Processes must include oversight by groups with diverse backgrounds and transparency around data collection and algorithm design. Systems must be designed so that they can be interrogated, with clear mechanisms for controlling them. Internal processes should incorporate warning signals that act as procedural fuses, highlighting when development is heading into dangerous areas, and should specify what happens if a process spirals out of control.

We believe that there should be an AI design governance model for medical research, chemical plant processes, highway driving, and many other everyday practices. Projects must have the ability to understand and shape who participates, including data scientists, social scientists, organizational and non-organizational policymakers, and designers of all sorts. The goal is not to slow down development with additional bureaucracy but to think ahead about what kinds of things may break when moving fast, how we will detect that they are breaking before they have broken completely, and how to fix them before they have broken irreparably. Our process borrows from similar approaches such as Institutional Review Boards (IRBs), participatory design, design ethics, and the work of Computer Professionals for Social Responsibility (CPSR).

There is an active, ongoing conversation about the ethical implications of AI in both the media and academia, with several recent conferences and workshops organized around themes related to AI for social good. The perspectives at these gatherings tend to consider ethics and AI in one of two ways: either as a technical challenge (the problem of incorporating moral decision-making into intelligent agents) or as a way to rein in a disruptive and powerful societal force (displacing workers, for example). These are worthy discussions within the AI community. Still, apart from the topic of bias in data, there is very little discussion about the current and immediate harm from intelligent systems and how it should be addressed in practice today.

Many discussions of ethics imagine a future state in which highly sophisticated AI agents fully participate in human spheres. Currently deployed AI technology is much less sophisticated than that ideal, yet it still has harmful effects on people and society. For the most part, developers’ priorities lie elsewhere. We have not only fallen short in our responsibility to imagine all the ways AI could affect the world around us; we have also caused harm by failing to think critically about the risks our AI systems can inflict.

Organizationally enforceable ethical frameworks like the one we propose are not without precedent. The one that most closely resembles, and inspired, our approach is described in the Belmont Report, which was created by the U.S. government. The report presents ethical principles and guidelines for the protection of human subjects in federally funded research. Several well-known incidents led to the report’s writing, primarily the Tuskegee Syphilis Study, which was run from 1932 until 1972 by the U.S. Public Health Service. The study is now notorious for violating ethical standards: researchers knowingly withheld treatment from sick patients.

It is a tragic irony that, most likely, the Tuskegee researchers and others like them convinced themselves they were doing good. For those who might have been less assured, a brief and misguided moral calculation concluding with some net benefit to society no doubt satisfied any concerns. AI researchers and designers now find themselves in a similar situation.

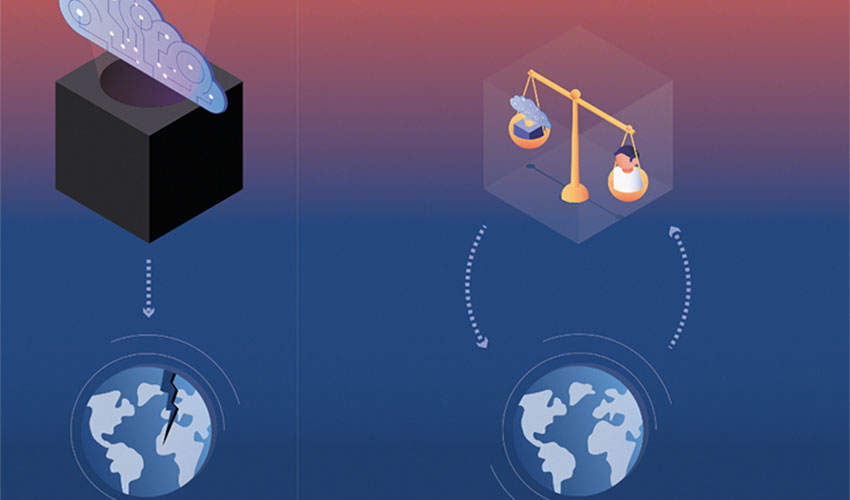

The tremendous advantages of AI-powered technology are covered regularly in media reports. Less visible are the negative consequences that have already emerged, and little thought has been given to the potential for further harm. In the current AI design culture, even well-intentioned practitioners struggle to understand their own responsibility in developing new applications of AI, even when those applications make decisions about human lives or interact with humans in ways that can put lives at risk, as we have seen with self-driving cars. Throughout history, many documents have set out to establish rules of conduct that minimize harm from human interactions, especially in the face of asymmetric power relationships (government over individuals, advantaged over disadvantaged, privileged over disenfranchised, expert over unskilled, etc.).

Some of these now have the force of international law behind them. Regrettably, documents like the Nuremberg Code, the Universal Declaration of Human Rights, and the Declaration of Helsinki were attempts to restore moral order only after human rights disasters had occurred. Across these documents, we found a few high-level principles relevant to our work that have guided our thinking:

• Respect for individuals

• Safety and security of people

• Respect for individuals’ right to privacy, protection of data, freedom of expression and participation in cultural life

• Equality and fairness

• Equitable distribution of benefits to society, so that benefits for some do not come at the cost of others

It would be much better to establish rules early on, before harm occurs, and so minimize the harm we inflict. Given our location in the heart of Silicon Valley in the United States, we necessarily have a particular perspective on issues of technology and society. We are steeped in the latest innovations and have the chance to observe their positive and negative effects on populations and subpopulations.

When considering the potential effects of new technology, our approach is not to consult a list of simple ethical standards in the hope of being nudged in the right direction. Real-world ethics are rarely simple. Ethical excellence begins with facing hard questions that lead to complex discussions of difficult trade-offs. Moreover, these questions and discussions must place ethical considerations in the context of the expected use of a particular technology.

At PARC, we have established a committee of relative experts and a process within the organization to help researchers and designers navigate the ethical landscapes of their projects. Committee members continue their own ethical education and refine their expertise through training, discussion of topical readings, and ongoing conversations about ethically relevant incidents and events in the world.

Through these principles and processes, we aim to make discussion of AI ethics a natural component of AI projects. We want considering ethics and mitigating potential risks to become a routine habit for the teams working with AI. We believe that by discussing possible outcomes, developing an extensive understanding of the experiences of all stakeholders involved, and creating a space where our biases are exposed to scrutiny, we nurture an innate competency in ethical design and engineering.